AI in Mind: The New, Smarter Way to Train Your Big Honkin Model

Microsoft's AI research team releases some exciting new results

I’ve recently become more interested in Artificial Intelligence (AI) in addition to more conventional approaches to psychology, so I’m going to start mixing in some writing about that. I’ll label it in the title with “AI in Mind” if you want to flag (or ignore!) it. Here are a couple of earlier pieces I did on AI over at Psychology Today.

The Problem

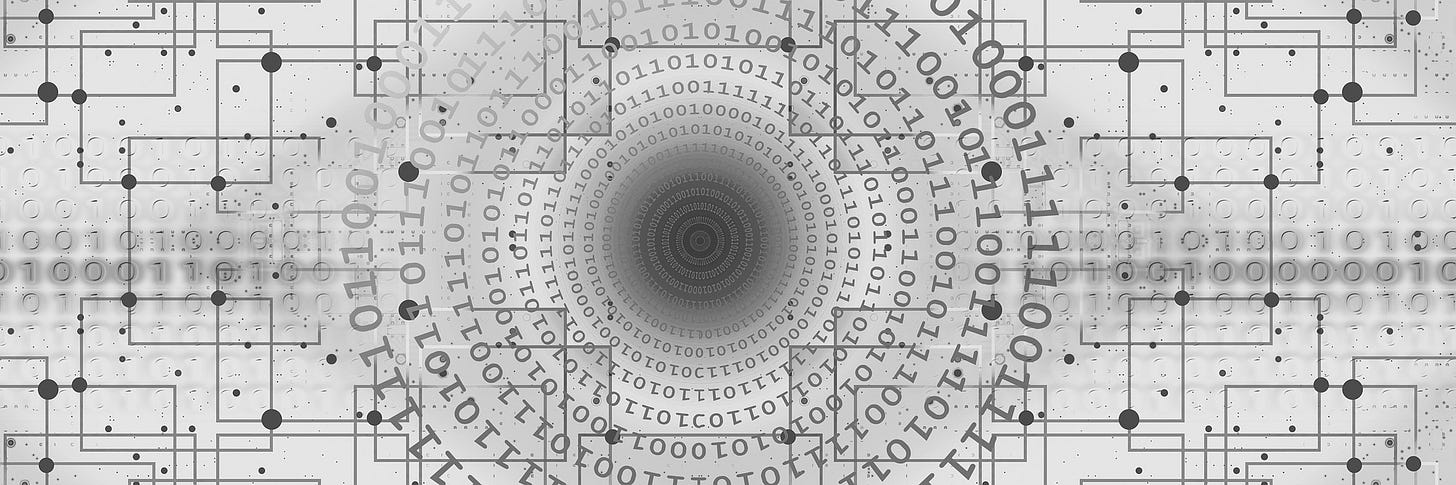

How do you teach a computer to do something really complicated, like learning to write in a particular style or extract some meaning from texts it reads? The answer that a lot of AI researchers have been betting on, especially the influential company OpenAI, is to make their models much bigger. The high-level synopsis is: neural network models have become a lot better at generating and understanding language in recent years by just becoming a lot larger. By larger, I mean that the model uses more numbers (called parameters) to represent human language. The figure at the top of this article shows how, from 2018 to 2022, AI models of language have jumped from roughly a hundred million parameters to around a trillion, meaning that the best models now use around a trillion numbers to represent language. It’s a lot of numbers.

Training neural networks, however, is a bit of an art. There are lots of small ways that a model can be tweaked (i.e., ways those trillion numbers can be combined), and there isn’t necessarily a principled way to determine the best way to organize the model. So the state-of-the-art approach is to just experiment a little with different ways of putting the model together and see which one works best. It’s a test-and-see approach that relies on rules of thumb.

One big problem is that the test-and-see approach is hard when your model is learning a trillion parameters. The process of training the model on those trillion parameters can take days or weeks. If you then want to try, say, 10 to 20 different versions of the model, each tweaked slightly, you’re now making your project take 10 to 20 times as long. If each version of the model takes a week, you could suddenly be extending your timeline by months. That’s where Microsoft’s latest breakthrough comes in.
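To put rough numbers on that timeline, here is a back-of-the-envelope sketch in Python. The function name and the one-week-per-run figure are illustrative assumptions taken from the example above, not measurements from any real training run.

```python
def sweep_weeks(num_variants, weeks_per_run=1.0):
    """Total wall-clock weeks if each tweaked variant is trained one after another."""
    return num_variants * weeks_per_run


WEEKS_PER_MONTH = 4.35  # approximate average, used only for a rough conversion

for n in (10, 20):
    weeks = sweep_weeks(n)
    months = weeks / WEEKS_PER_MONTH
    print(f"{n} variants -> {weeks:.0f} weeks (about {months:.1f} months)")
```

Running the variants in parallel would shorten the calendar time, but the total computing bill would stay the same.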

The Solution

Researchers at Microsoft created a new technique, called 𝞵-Parameterization (which they call “mu-P” or 𝞵P), that allows you to figure out the best way to set up a big complicated model (the best way to tune its hyperparameters, in the insider language) by testing out much smaller models. In other words, you could figure out the best way to set up your HUGE model using a much smaller version. In their paper, for example, they find a better way to set up a 350 million parameter model by just testing a bunch of 13 million parameter models. They also find a better way to set up a 6.7 billion parameter model using just a 40 million parameter model.

This is a breakthrough because, before this, researchers thought that the best setup (aka the best tuning) depended on the model’s size. In fact, some tests of the default method for setting up neural networks in a popular framework (PyTorch) showed that specific tunings had different effects if the network was larger or smaller. The team came up with a specific way of setting up the tuning process so that what you saw at a small scale would also apply at a large scale. This specific setup is 𝞵P, and having it allows you to do 𝞵Transfer: taking what you learned on a small network, and applying it to a big one.
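For readers who like to see the workflow spelled out, here is a toy Python sketch of the tune-small, reuse-big idea. Everything in it is a hypothetical stand-in: `proxy_loss` is a made-up formula rather than real training, and it simply assumes that the best learning rate stays put as the model gets wider, which is the property 𝞵P is meant to provide. It is not the paper's method or the API of any real package.

```python
import math


def proxy_loss(width, log2_lr):
    """Stand-in for 'train a model of this width at this learning rate, return its loss'.

    Toy assumption: the best learning rate (2**-8 here) does not move with width.
    A real experiment would replace this function with an actual training run.
    """
    best_log2_lr = -8.0
    return 1.0 / math.sqrt(width) + 0.1 * (log2_lr - best_log2_lr) ** 2


def tune_on_small(width, candidates):
    """Sweep the candidate learning rates on the cheap small model, return the winner."""
    return min(candidates, key=lambda log2_lr: proxy_loss(width, log2_lr))


candidates = [-12.0, -10.0, -8.0, -6.0, -4.0]

# Step 1: many cheap runs on the small model.
best = tune_on_small(width=256, candidates=candidates)

# Step 2: one expensive run on the large model, reusing the winner with no new sweep.
big_loss = proxy_loss(width=8192, log2_lr=best)

print(f"best log2(lr) from small model: {best}; large-model loss: {big_loss:.4f}")
```

The point of the sketch is the shape of the procedure: the expensive model is trained once, and all of the trial and error happens on the small one.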

As an analogy: imagine you are tuning a bunch of guitars, each a different size. Normally, you have to twist the tuning pegs at the head of the guitar a different number of times to tune each one. That’s not so bad, but one of your guitars is really big, and each twist is a real pain to make. So now someone says “hey, based on how you tuned the small guitars, I can just give you exact instructions on how to tune that big guitar. You just do two and a half twists to the first peg, one and a quarter to the second, …” (and so on). Now you can tune that big guitar way more easily!

In their work, the team found that their new method for tuning models was about an order of magnitude (10X) more efficient than the previous convention. Their results also led them to believe the gains will be even larger when working with bigger, more complex models. So they teamed with OpenAI to evaluate one of OpenAI’s huge, 6.7 billion parameter models. In their tests, they beat the previous best result while spending only about 7% of the model’s pretraining compute on tuning.

Going Forward

Overall, this effort matches two of the criteria for doing high quality science. First, it is important for developing an underlying theory of how to train neural networks. The 𝞵P approach suggests a way of finding the best way of tuning a model that doesn’t just rely on “we tried a bunch of things and this one seemed to work best.” It gives a method for identifying what those best tunings will be.

Second, it has large practical importance. People use large models of language for a lot of practical applications, such as generating labels for images, helping to write code, and even automatically generating sports commentary. The team released a Python package, called mup, that can be used to improve these models right away. So the organizations that use algorithms to process text should be able to apply this result to do their jobs better.

In general, this breakthrough exemplifies the kind of scientific achievement I believe psychologists should be pursuing. In situations where we’re just using a rule of thumb about the best way to do something (“what’s the best way to measure depression?” or “what’s the best way to get people to increase empathy?”), we should be able to do some deep research to develop a deeper theory. That theory can then tell us with more precision and certainty how to do that thing. We can move from relying on “that looks right” to “we have a good, well-tested reason to believe that this specific way really is right.” And that’s what good knowledge development looks like.